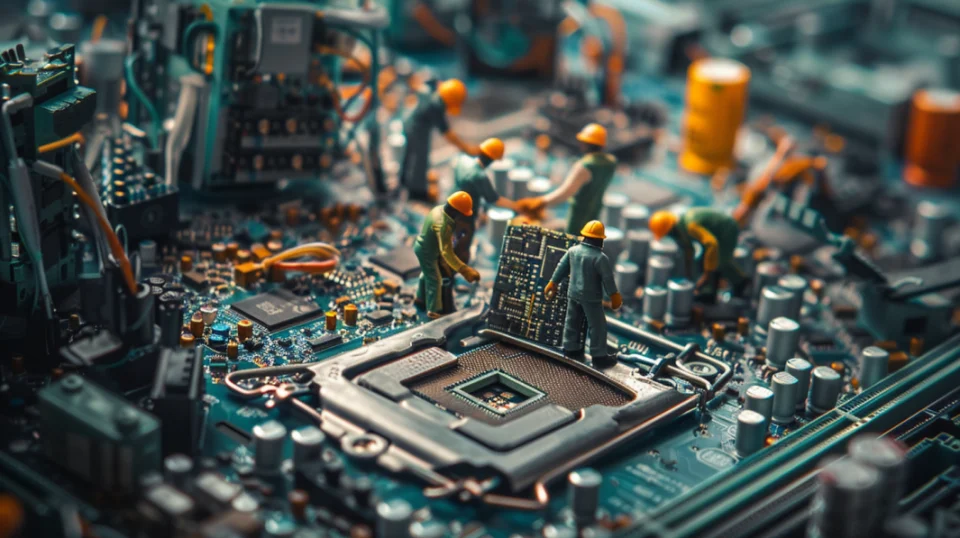

How to update your PC BIOS

Contents:

- Introduction
- Accessing the BIOS from Windows
- Alternatives to using the Windows 10 and 11 method
- What does UEFI stand for?
- When should you update your BIOS?
- How to update your BIOS
- BIOS update considerations

Every computer has a BIOS, short for Basic...