In the monitoring sector there are three key areas that IT administrators need to keep an eye on: communications, servers and applications. All three are essential for a company’s services to function, and a critical problem in any of them can be damaging. This article focuses on application monitoring, looking at the needs, technologies, services and other key points.

Applications always run on a server, whether that is a PC hosting a college’s intranet or a machine supporting a multinational company’s virtualization services. That makes server monitoring, of both hardware and resources, the first key area to cover in order to have your applications under control.

The second key point is application monitoring “from the inside”: analyzing each technology, the resources it needs, its processes and services, its dependencies and its internal functioning. This is the most complicated part, as it will be different for each application or technology used. Let’s take a look at the different ways we can get this information.

Remote monitoring

The first option on the table would be to obtain the information remotely, that is, without installing any software on the server where the application is running. It’s done by running remote checks over the network using one of the different protocols available.

ICMP

We can start by checking whether the server is up and reachable, using ICMP availability and latency checks. This way we’ll know at any time if the server crashes or disconnects, or if a network incident is slowing down response times and degrading the service. The most typical case involves creating a “Host Alive” check that pings the server to confirm it responds, and using the same ping to create a “Host Latency” check that detects latency issues on the network.
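As a minimal sketch of the “Host Alive” and “Host Latency” checks described above, the following Python snippet calls the operating system’s `ping` command and reads the round-trip time from its output. The function names, the 100 ms threshold and the regular expression are assumptions made for illustration; ping output varies between platforms and locales, so the parsing may need adjusting.

```python
import platform
import re
import subprocess
from typing import Optional


def parse_ping_latency(output: str) -> Optional[float]:
    """Return the first round-trip time (ms) found in ping output, or None."""
    # Matches "time=12.3 ms" (Linux/macOS) and "time<1ms" (Windows).
    match = re.search(r"time[=<]\s*([\d.]+)\s*ms", output)
    return float(match.group(1)) if match else None


def ping_once(host: str, timeout_s: float = 3.0) -> Optional[float]:
    """Send a single echo request; None means no usable reply was seen."""
    count_flag = "-n" if platform.system() == "Windows" else "-c"
    try:
        result = subprocess.run(
            ["ping", count_flag, "1", host],
            capture_output=True, text=True, timeout=timeout_s,
        )
    except (subprocess.TimeoutExpired, FileNotFoundError):
        return None
    if result.returncode != 0:
        return None
    return parse_ping_latency(result.stdout)


def summarize(latency_ms: Optional[float], max_latency_ms: float = 100.0) -> dict:
    """Turn one latency reading into Host Alive / Host Latency results."""
    alive = latency_ms is not None
    return {
        "host_alive": alive,
        "host_latency_ms": latency_ms,
        # Hypothetical threshold: flag the check when latency exceeds it.
        "latency_ok": alive and latency_ms <= max_latency_ms,
    }


def check_host(host: str, max_latency_ms: float = 100.0) -> dict:
    """Run both checks from a single ping."""
    return summarize(ping_once(host), max_latency_ms)
```

Calling `check_host("192.0.2.10")` (a placeholder address) returns a small dictionary that a scheduler could evaluate every few minutes. A single lost packet does not necessarily mean the server is down, so in practice you would probably require several consecutive failures before raising an alert.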

SNMP

If the server supports SNMP, one of the most widely deployed protocols for network monitoring, this is another way to get detailed information about the system’s resources and any problems with your machines: stress points, bottlenecks, CPU usage, server memory, and so on.

WMI

If you want to monitor a Windows application server, remote WMI checks will do just fine. This Microsoft technology can remotely retrieve practically all the data you might need, from resource status (memory, CPU, disk space…) to processes and services. You need to provide OS credentials to run the checks. In a real environment, we could list the running services in order to monitor applications, for example the Exchange services.

Technology-specific protocols

This is the most complex item on our application monitoring menu. Tech-specific application monitoring won’t let you rely on your general knowledge of service and process monitoring. Instead, you have to know the app inside out and preferably back to front: how it works, how it packages and sends its data, whether it’s listening on any port, whether it’s compatible with any monitoring you’ve deployed over the applications it depends on, etc.

A common case would be to remotely monitor port 80 on your web server to see if it’s listening and, in parallel, to check availability and load times on your websites. Another case would be to check port 3306 to see if the MySQL server is running. You can also run more advanced checks, such as security checks: connecting from an IP address that MySQL accepts connections from, and also from an unauthorized IP in order to confirm that you get a negative reply.
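The port checks mentioned above can be sketched with Python’s standard `socket` module. This is only an illustration: the function name `check_tcp_port` and the 3-second timeout are assumptions, and a successful TCP connection shows that something is listening on the port, not that the application behind it is healthy.

```python
import socket
import time
from typing import Optional


def check_tcp_port(host: str, port: int, timeout_s: float = 3.0) -> Optional[float]:
    """Return the connect time in ms if the port accepts a TCP connection.

    Returns None when the connection is refused, times out, or the host
    cannot be resolved.
    """
    start = time.monotonic()
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout_s):
            return (time.monotonic() - start) * 1000.0
    except OSError:
        return None
```

For example, `check_tcp_port("web.example.com", 80)` would cover the web server case and `check_tcp_port("db.example.com", 3306)` the MySQL case (both hostnames are placeholders). Running the same check from an unauthorized IP and expecting `None` is one way to approach the negative security check, provided the restriction is enforced at the network level.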

In most cases the applications have their own command line interfaces to which you can remotely connect to execute commands and get information. The challenge resides in harvesting this information from outside the specific command line interface; in some cases you can get around this by launching remote requests without accessing the command line interface, but it isn’t always so simple.

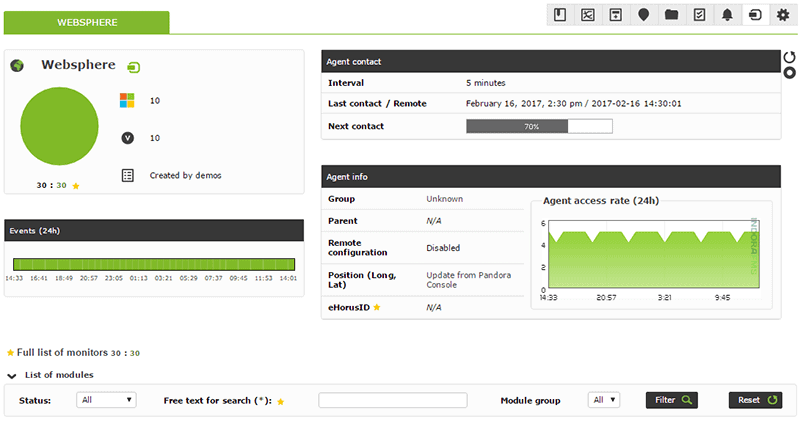

Local monitoring

Local monitoring, also known as agent-based monitoring, consists of installing a small piece of software on your server that runs in the background, collecting and sending data at set intervals. This system allows data to be retrieved from the applications themselves.

Normally, specific credentials are required to access applications and retrieve information, and it’s generally simpler to extract the data and express it in modules this way than via remote monitoring. Retrieving this kind of fine-grained data requires some knowledge of the applications you want to monitor, although the results are going to be better than those obtained through remote monitoring.
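To make the agent idea concrete, here is a minimal sketch of a background collector that gathers one local metric and hands it to a sender at a fixed interval. It is not how any particular monitoring agent is implemented: the names `collect_metrics` and `run_agent`, the JSON payload and the disk usage metric are all assumptions chosen for illustration, and a real agent would collect application-specific data and ship it to a monitoring server.

```python
import json
import os
import shutil
import socket
import time
from typing import Callable, Optional


def collect_metrics(path: Optional[str] = None) -> dict:
    """Collect a small sample of local data (here, only disk usage)."""
    # Default to the filesystem root of the current drive.
    usage = shutil.disk_usage(path or os.path.abspath(os.sep))
    return {
        "host": socket.gethostname(),
        "timestamp": time.time(),
        "disk_used_percent": round(usage.used / usage.total * 100, 1),
    }


def run_agent(send: Callable[[str], None],
              interval_s: float = 60.0,
              iterations: Optional[int] = None) -> None:
    """Collect and send data every interval_s seconds.

    iterations=None runs forever, as a background agent would;
    a number limits the loop, which is handy for trying it out.
    """
    sent = 0
    while iterations is None or sent < iterations:
        send(json.dumps(collect_metrics()))
        sent += 1
        if iterations is None or sent < iterations:
            time.sleep(interval_s)
```

Calling `run_agent(print, interval_s=5, iterations=3)` prints three JSON samples five seconds apart. Passing the sender in as a function keeps the collection loop independent of how the data is delivered, whether that is a file, a socket or an HTTP request.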

Pros and cons

Remote monitoring

Pros:

- Quick deployment.

- Minimal consumption of resources.

- Data can be collected from outside your network, so it reflects the service that clients and users actually receive.

Cons:

- Not possible on all applications.

- Communications-related incidents can affect the data.

- It’s more difficult to harvest the data cleanly and clearly.

Local monitoring

Pros:

- More detail.

- The info comes from the application side, without having to go through external channels.

- You can be more proactive in the actions you execute, based on the data you get.

Cons:

- Requires software to be installed, impacting your resources.

- Slower deployment.

- Decentralized data collection.

In the final analysis, different variables come into play, meaning that, depending on your infrastructure, demands and capacity, one method or the other will be preferable. If you find yourself having to choose between local and remote application monitoring, it’s best to carry out a study, beginning with the three categories cited above, to help you decide on the optimal method.

Pandora FMS’s editorial team is made up of a group of writers and IT professionals with one thing in common: their passion for computer system monitoring.